Search for a new JSON: jsoniter

Why did nobody tell me about this?

Both of my regular readers may be wondering what happened after my last blog post about potentially forking encoding/json. Did I fork it? No, I didn’t.

This is partly because I discovered https://github.com/json-iterator/go, which looks like the JSON library I was looking for, and partly because I spent my enthusiasm writing plenc, a serialisation library built around the protobuf wire protocol that bases message definitions on Go structs instead of .proto files. But I’ll save talking about plenc for a future post.

But today we’re going to talk about jsoniter. jsoniter is a fabulous JSON library that goes out of its way to discourage you from using it. For one thing, it isn’t really clear about its name. Is it json-iterator or jsoniter? And if you look at GitHub it looks like it’s ‘just’ a Go version of an older Java library. And Java == bad, right? And I don’t want to iterate my JSON, I want to encode and decode it.

If you move past that and start reading the docs there are some heavy hints that it does what we want.

> Package jsoniter implements encoding and decoding of JSON as defined in RFC 4627 and provides interfaces with identical syntax of standard lib encoding/json. Converting from encoding/json to jsoniter is no more than replacing the package with jsoniter and variable type declarations (if any). jsoniter interfaces gives 100% compatibility with code using standard lib.

So it looks very much like we can just drop in jsoniter and we’ll get better performance. Does it really offer the performance and convenience I’m after? To find out, I’ll write some benchmarks using the kind of structs we use at Ravelin and compare encoding/json (from Go 1.14) with jsoniter.

Benchmark

Here are the benchmarks. Apologies for the length. We’ll test with a struct that contains fields that have custom Marshalers and Unmarshalers. These are the types of fields that we’ve had performance issues with using encoding/json.

```go
package badgo

import (
	"encoding/json"
	"testing"
	"time"

	jsoniter "github.com/json-iterator/go"
	"github.com/unravelin/null"
)

// myteststruct only has fields whose types carry custom Marshalers and
// Unmarshalers.
type myteststruct struct {
	A null.Int
	B time.Time
	C time.Time
	D null.String
}

func BenchmarkEncodeMarshaler(b *testing.B) {
	m := myteststruct{
		A: null.IntFrom(42),
		B: time.Now(),
		C: time.Now().Add(-time.Hour),
		D: null.StringFrom(`hello`),
	}

	b.Run("encoding/json", func(b *testing.B) {
		b.ReportAllocs()
		b.RunParallel(func(pb *testing.PB) {
			for pb.Next() {
				if _, err := json.Marshal(&m); err != nil {
					// b.Fatal may only be called from the benchmark's own
					// goroutine, so report the error and stop this worker.
					b.Error("Encode:", err)
					return
				}
			}
		})
	})

	b.Run("jsoniter", func(b *testing.B) {
		b.ReportAllocs()
		// Shadow the json package so the loop body is identical to the one above.
		var json = jsoniter.ConfigCompatibleWithStandardLibrary
		b.RunParallel(func(pb *testing.PB) {
			for pb.Next() {
				if _, err := json.Marshal(&m); err != nil {
					b.Error("Encode:", err)
					return
				}
			}
		})
	})
}

func BenchmarkDecodeMarshaler(b *testing.B) {
	m := myteststruct{
		A: null.IntFrom(42),
		B: time.Now(),
		C: time.Now().Add(-time.Hour),
		D: null.StringFrom(`hello`),
	}

	// Both sub-benchmarks decode the same encoding/json output.
	data, err := json.Marshal(&m)
	if err != nil {
		b.Fatal(err)
	}

	b.Run("encoding/json", func(b *testing.B) {
		b.ReportAllocs()
		b.RunParallel(func(pb *testing.PB) {
			var n myteststruct
			for pb.Next() {
				if err := json.Unmarshal(data, &n); err != nil {
					b.Error(err)
					return
				}
			}
		})
	})

	b.Run("jsoniter", func(b *testing.B) {
		b.ReportAllocs()
		var json = jsoniter.ConfigCompatibleWithStandardLibrary
		b.RunParallel(func(pb *testing.PB) {
			var n myteststruct
			for pb.Next() {
				if err := json.Unmarshal(data, &n); err != nil {
					b.Error(err)
					return
				}
			}
		})
	})
}
```

And the results? Well, jsoniter is a little faster but has more allocations for both encoding and decoding. Not the result I was hoping for. But we didn’t really expect things to be quite that easy.

```
name                              time/op
EncodeMarshaler/encoding/json-16  342ns ± 8%
EncodeMarshaler/jsoniter-16       252ns ± 4%
DecodeMarshaler/encoding/json-16  506ns ± 2%
DecodeMarshaler/jsoniter-16       472ns ± 2%

name                              allocs/op
EncodeMarshaler/encoding/json-16  6.00 ± 0%
EncodeMarshaler/jsoniter-16       7.00 ± 0%
DecodeMarshaler/encoding/json-16  9.00 ± 0%
DecodeMarshaler/jsoniter-16       16.0 ± 0%
```

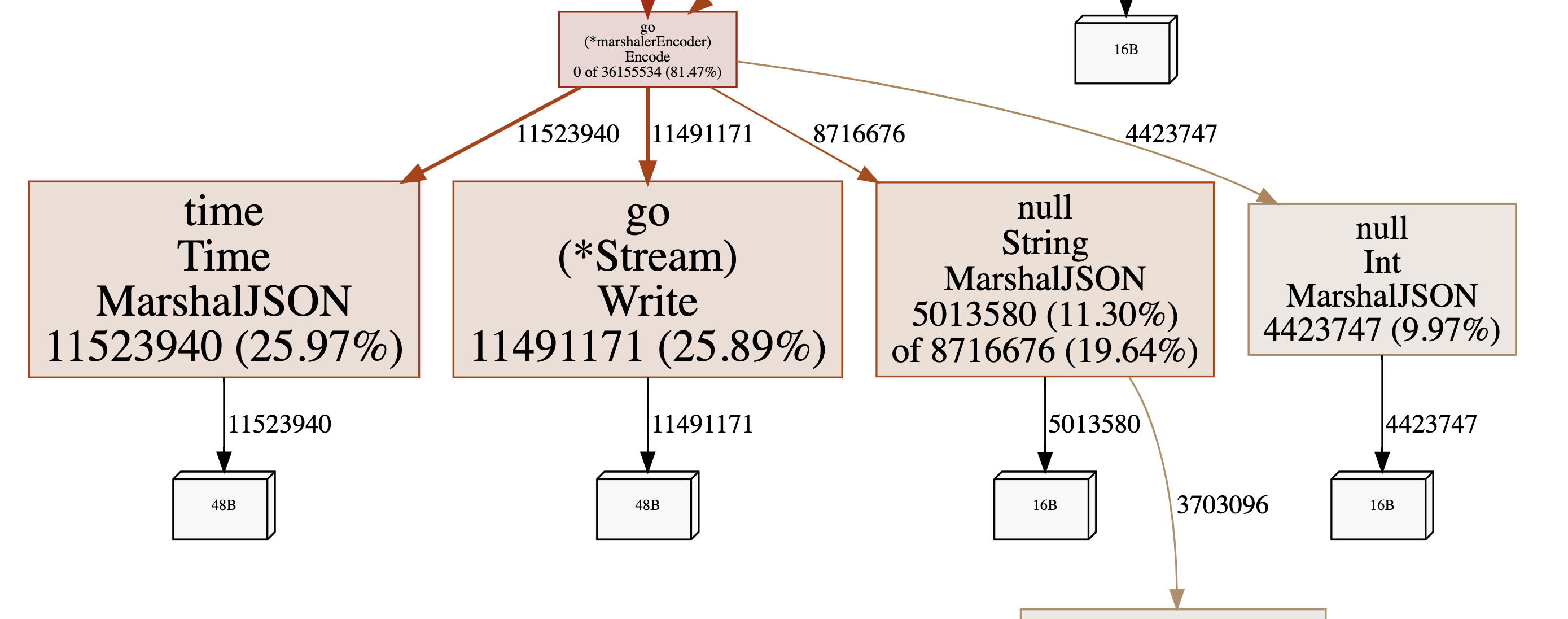

Let’s try to improve things. To improve we need to know where these allocations are coming from. We can quickly run a profile.

```shell
go test -bench 'BenchmarkEncodeMarshaler/jsoniter' -run '^$' -memprofile mem.prof
go tool pprof -http :6060 post.test mem.prof
```

Switching to the alloc_objects samples we can quickly see where most of the allocations are happening. It’s in our old favourite (Un)Marshaler methods. I’m going to hope there’s a jsoniter way of dealing with these that we can apply with a little effort.
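To see why Marshaler methods are an allocation magnet, here is a rough standard-library-only sketch. The `nullInt` type is a made-up stand-in for something like null.Int, not its real implementation, and `withMarshaler`, `withPlain`, `encode` and `allocsPerMarshal` are names I invented for the illustration. The point is that MarshalJSON has to return its result as a fresh []byte, so a field with a Marshaler costs allocations that a plain int64 field does not.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strconv"
	"testing"
)

// nullInt is a hypothetical nullable integer, loosely modelled on types
// like null.Int. It is an assumption for this sketch only.
type nullInt struct {
	Int   int64
	Valid bool
}

// MarshalJSON must hand back a new []byte on every call, so each encode
// of this field allocates before encoding/json copies the bytes out.
func (n nullInt) MarshalJSON() ([]byte, error) {
	if !n.Valid {
		return []byte("null"), nil
	}
	return strconv.AppendInt(nil, n.Int, 10), nil
}

type withMarshaler struct{ A nullInt }

type withPlain struct{ A int64 }

// encode marshals v with encoding/json and returns the JSON text.
func encode(v interface{}) string {
	b, err := json.Marshal(v)
	if err != nil {
		panic(err)
	}
	return string(b)
}

// allocsPerMarshal reports the average number of heap allocations made by
// one json.Marshal call on v.
func allocsPerMarshal(v interface{}) float64 {
	return testing.AllocsPerRun(1000, func() {
		if _, err := json.Marshal(v); err != nil {
			panic(err)
		}
	})
}

func main() {
	// Both structs produce the same JSON for a valid value.
	fmt.Println(encode(withPlain{A: 42}))
	fmt.Println(encode(withMarshaler{A: nullInt{Int: 42, Valid: true}}))

	fmt.Println("plain field allocs:    ", allocsPerMarshal(&withPlain{A: 42}))
	fmt.Println("Marshaler field allocs:", allocsPerMarshal(&withMarshaler{A: nullInt{Int: 42, Valid: true}}))
}
```

The exact counts depend on the Go version, so I have not quoted any here, but the Marshaler version should always come out higher.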

If you stare at the documentation extremely hard you’ll eventually spot that jsoniter has a very powerful mechanism for writing Encoders and Decoders for any type. These codecs don’t have to be methods of the types being encoded/decoded, which means you can create custom marshallers for types that are parts of other packages. So you can directly create custom marshallers and unmarshallers for time.Time for example. This is a complete game-changer. It allows total flexibility.

Here’s a codec for time.Time that implements both jsoniter.ValEncoder and jsoniter.ValDecoder interfaces.

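```go
import (
	"time"
	"unsafe"

	jsoniter "github.com/json-iterator/go"
)

// timeCodec implements jsoniter.ValEncoder and jsoniter.ValDecoder for time.Time.
type timeCodec struct{}

// IsEmpty reports whether the time is the zero value.
func (timeCodec) IsEmpty(ptr unsafe.Pointer) bool {
	return (*time.Time)(ptr).IsZero()
}

// Encode writes the time as a quoted RFC 3339 string.
func (timeCodec) Encode(ptr unsafe.Pointer, stream *jsoniter.Stream) {
	t := (*time.Time)(ptr)
	// The scratch array is sized for the longest RFC3339Nano value plus the
	// two quotes, so formatting does not need a temporary slice.
	var scratch [len(time.RFC3339Nano) + 2]byte
	b := t.AppendFormat(scratch[:0], `"`+time.RFC3339Nano+`"`)
	stream.Write(b)
}

// Decode accepts either null (zero time) or an RFC 3339 string.
func (timeCodec) Decode(ptr unsafe.Pointer, iter *jsoniter.Iterator) {
	switch iter.WhatIsNext() {
	case jsoniter.NilValue:
		iter.Skip()
		*(*time.Time)(ptr) = time.Time{}
		return
	case jsoniter.StringValue:
		ts := iter.ReadStringAsSlice()
		// View the bytes as a string without copying. This is only safe
		// because nothing keeps the string once this function returns.
		t, err := time.Parse(time.RFC3339Nano, *(*string)(unsafe.Pointer(&ts)))
		if err != nil {
			iter.ReportError("decode time.Time", err.Error())
			return
		}
		*(*time.Time)(ptr) = t
	default:
		iter.ReportError("decode time.Time", "unexpected JSON type")
	}
}
```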
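The two tricks the codec leans on, formatting into a fixed-size scratch array and parsing straight from a byte slice, can be tried out with only the standard library. This is a minimal sketch rather than part of the codec: `formatJSONTime`, `parseTimeBytes` and `sampleTime` are names I made up for it. It checks that the AppendFormat output matches what time.Time's own MarshalJSON produces for the sample value, which is what you want from a drop-in replacement.

```go
package main

import (
	"fmt"
	"time"
	"unsafe"
)

// sampleTime returns a fixed timestamp so the output is reproducible.
func sampleTime() time.Time {
	return time.Date(2020, time.May, 17, 10, 30, 0, 123456789, time.UTC)
}

// formatJSONTime renders t as a quoted RFC 3339 string using a fixed-size
// scratch array, the same approach as the Encode method above.
func formatJSONTime(t time.Time) string {
	var scratch [len(time.RFC3339Nano) + 2]byte
	b := t.AppendFormat(scratch[:0], `"`+time.RFC3339Nano+`"`)
	return string(b)
}

// parseTimeBytes parses an unquoted RFC 3339 timestamp held in a byte slice.
// The cast reinterprets the slice header as a string header without copying,
// so raw must not be modified while the string is in use.
func parseTimeBytes(raw []byte) (time.Time, error) {
	s := *(*string)(unsafe.Pointer(&raw))
	return time.Parse(time.RFC3339Nano, s)
}

func main() {
	t := sampleTime()

	encoded := formatJSONTime(t)
	std, err := t.MarshalJSON()
	if err != nil {
		panic(err)
	}
	fmt.Println(encoded)
	fmt.Println("same as MarshalJSON:", encoded == string(std))

	// Strip the surrounding quotes, as a JSON string reader would.
	decoded, err := parseTimeBytes([]byte(encoded[1 : len(encoded)-1]))
	if err != nil {
		panic(err)
	}
	fmt.Println("round trip ok:", decoded.Equal(t))
}
```

On Go 1.20 and later, unsafe.String with unsafe.SliceData is the more conventional way to write the same conversion. I have kept the pointer cast here because it matches the codec.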

You register the codec with jsoniter as follows. The name you register with is the reflect2 type name, which you can get from reflect2.TypeOf(time.Time{}).String().

```go
jsoniter.RegisterTypeEncoder("time.Time", timeCodec{})
jsoniter.RegisterTypeDecoder("time.Time", timeCodec{})
```
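If you are not sure what name to register a type under, a quick way to find out is to print it. The sketch below uses the standard reflect package rather than reflect2, on the assumption that reflect2 reports the same type strings. I believe it does, but treat that as an assumption. `typeName` and `timeTypeName` are helpers invented for the example.

```go
package main

import (
	"fmt"
	"reflect"
	"time"
)

// typeName returns the package-qualified type string for v.
func typeName(v interface{}) string {
	return reflect.TypeOf(v).String()
}

// timeTypeName is the string used when registering the time.Time codec.
var timeTypeName = typeName(time.Time{})

func main() {
	fmt.Println(timeTypeName)               // time.Time
	fmt.Println(typeName(&time.Time{}))     // *time.Time
	fmt.Println(typeName(time.Duration(0))) // time.Duration
}
```

Note that the pointer type has a different name from the value type, so a codec registered for "time.Time" is keyed on the value type only.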

Being able to create encoders and decoders for third-party types is a huge improvement over the json.Marshaler and json.Unmarshaler in the standard encoding/json. It gives you much more flexibility. You can improve on the marshalling of standard library types like time.Time without needing to make changes to the library.

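Anyway, back to performance. Once I’ve created codecs for time.Time, null.String and null.Int the benchmarks improve considerably. jsoniter now encodes in 160ns with 2 allocations where encoding/json takes 343ns and 6, and decodes in 172ns with 1 allocation where encoding/json takes 506ns and 9. Now jsoniter looks much more attractive than encoding/json.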

```
name                              time/op
EncodeMarshaler/encoding/json-16  343ns ± 9%
EncodeMarshaler/jsoniter-16       160ns ± 3%
DecodeMarshaler/encoding/json-16  506ns ± 1%
DecodeMarshaler/jsoniter-16       172ns ± 4%

name                              allocs/op
EncodeMarshaler/encoding/json-16  6.00 ± 0%
EncodeMarshaler/jsoniter-16       2.00 ± 0%
DecodeMarshaler/encoding/json-16  9.00 ± 0%
DecodeMarshaler/jsoniter-16       1.00 ± 0%
```

jsoniter’s documentation is incomplete and it has a confusing name, but if you fight your way through that you’ll find a high-performance, high-quality JSON library that’s a drop-in replacement for encoding/json without needing any code generation. So if I spend any more energy on JSON in Go I think I’ll try contributing documentation to jsoniter.