I stan clearing maps, no cap

A better story than that tortuous title suggests!

Recently at work I ran into an interesting performance problem. We have a data processing job that is basically a series of count-distinct operations. It uses a map to help work out the distinct sets of values.

In the past we’ve done some performance work on this, and we’ve arranged things so that we re-use the map between operations.

Re-using the map was great! Calling clear on the map and re-using it was much

faster than creating a new map each time.

So what’s the problem?

Over time the job has taken longer and longer to complete. This is expected because the data it runs over just keeps getting bigger. But it had gotten to the point where it was becoming a problem. 40 hours of a problem.

Looking at some metrics, the job was pegged at 100% CPU, but throughput dropped significantly at a couple of points over the runs. It didn’t gradually slow down, it was two distinct sharp steps down.

What was happening?

I was able to pull a CPU profile, captured after one of the steps down. It showed a very large amount of time spent clearing maps. Why would that have become slow? Re-using and clearing the maps was previously a performance improvement. Perhaps there’s something about the data that’s causing a problem?

I did some analysis on the data, and eventually decided to work out how many distinct items there would be per operation. It turned out mostly the numbers were as expected - with up to a thousand or so items per task.

But on a couple of occasions we had hundreds of thousands of distinct items for one task. The distribution of items had some very distinct outliers.

Why is that a problem?

Is clearing a map with hundreds of thousands of items slow? We can test that out!

func BenchmarkMapClearing(b *testing.B) {

m := make(map[string]struct{}, 100_000)

for range b.N {

clear(m)

}

}

It turns out that’s very fast.

BenchmarkMapClearing-16 2.743 ns/op

Ah, but what if we use the map just a little bit? And let’s try some different sizes.

func BenchmarkMapClearingWithItems(b *testing.B) {

for _, size := range []int{100, 1_000, 10_000, 100_000} {

m := make(map[string]struct{}, size)

b.Run(strconv.Itoa(size), func(b *testing.B) {

for range b.N {

m["hat"] = struct{}{}

clear(m)

}

})

}

}

BenchmarkMapClearingWithItems/100 144.1 ns/op

BenchmarkMapClearingWithItems/1000 1336 ns/op

BenchmarkMapClearingWithItems/10000 11159 ns/op

BenchmarkMapClearingWithItems/100000 103741 ns/op

Oh.

It’s kind of obvious when you think about it. If the map is empty, there’s nothing to clear. Clear can be fast.

If the map isn’t empty, even if there’s just one item, then it has to iterate over all the cells in the map and empty them. It might not take long in the greater scheme of things, but it does take more time in line with the capacity of the map.

Is clearing a map of each size still faster than allocating a new map each time?

func BenchmarkMapAllocation(b *testing.B) {

for _, size := range []int{100, 1_000, 10_000, 100_000} {

b.Run(strconv.Itoa(size), func(b *testing.B) {

for range b.N {

m := make(map[string]struct{}, size)

_ = m

}

})

}

}

BenchmarkMapAllocation/100-16 512.0 ns/op

BenchmarkMapAllocation/1000-16 5305 ns/op

BenchmarkMapAllocation/10000-16 36463 ns/op

BenchmarkMapAllocation/100000-16 237231 ns/op

Yes, it’s still twice as fast or more to clear the map than to allocate a new one. And that’s true at any size I tested. But it’s much slower to clear a map that can hold 100,000 items than it is to allocate a map for 1000 items.

Our problem is that we have different data distributions that one job needs to cope with.

Let’s restate that again

Now we understand what’s happening. To begin with, our job processes tasks that have our “normal” distribution of items. Perhaps 1000 items per task.

The map capacity is about 1000. We use about 1000 slots in the map each time and it takes about 1.3µs to clear the map.

Then we get a task that has 50,000 items. The map capacity increases to 50,000. We use about 50,000 slots for this one task and it takes about 50µs to clear the map.

Now we continue with our normal tasks. The map capacity stays at 50,000. We use about 1000 slots in the map each time, but it still takes about 50µs to clear the map.

It still takes about 50µs to clear the map. Every time. Up from 1.3µs!

That’s an extra 49µs per task from this point forward, so our throughput for the same CPU usage drops significantly.

Then we get a task with 100,000 items, the map capacity increases to 100,000, it starts taking 100µs to clear the map, and throughput steps down again.

So what do we do?

We’re fortunate enough that at the start of each task we know how many items we’re going to feed into our count-distinct operation. And normally the distinct number is not that much lower.

So the obvious solution is to check the capacity of the map before we clear it for the new task, and if it’s way too big we allocate a new map instead.

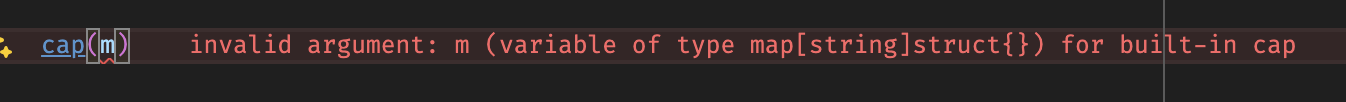

Ah, maps have no cap. https://pkg.go.dev/builtin#cap.

If you’re wondering why, there’s a rejected proposal to add cap for maps here.

The boring ending

In the end I just switched things to use two maps - one tasks with a smaller number of items to process, and one for tasks with a larger number of items to process. Worked fine. If I was feeling more sophisticated I might have used a sequence of sizes, so that each order of magnitude of items has an appropriately sized map.